TurtleBot3: First explorations

I’m done assembling my TurtleBot3. I’ve already introduced it and covered some minor hardware and software issues, so I’ll jump into some fun stuff.

LDS (LASER Distance Sensor)

One of the coolest features of the TurtleBot3 Burger is the LASER Distance Sensor (I guess it could also be called a LiDAR or a LASER scanner). This is the component that enables us to do Simultaneous Localization and Mapping (SLAM) with a TurtleBot3.

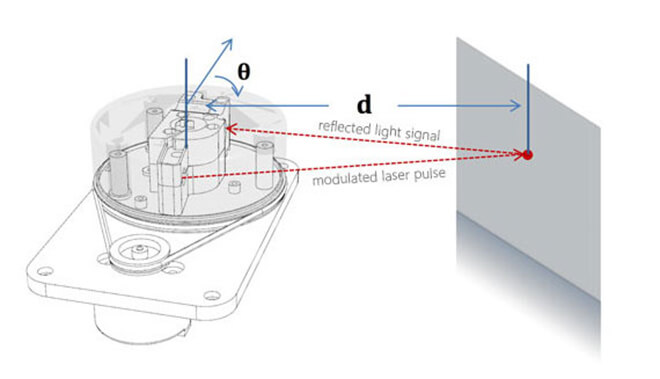

The LDS emits a modulated infrared laser while rotating through a full 360°. The sensor processes the returning signal and extracts the angle and distance of each reading. Here’s a sketch I found on RobotShop for another LiDAR model:

Sketch for RPLIDAR 360° Laser Scanner

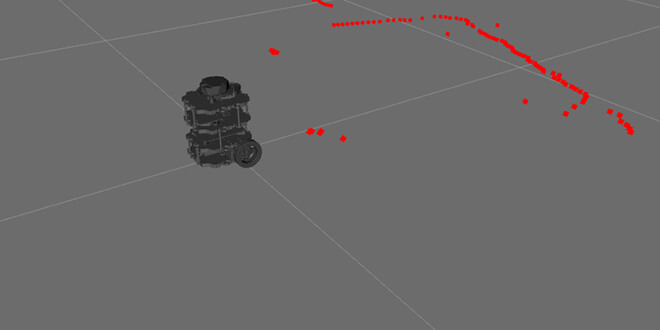
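In code terms, each reading from a scanner like this boils down to an (angle, distance) pair. The sketch below converts one rotation’s worth of readings into x/y points around the sensor. The `Scan` class is a hypothetical stand-in whose field names are borrowed from ROS’s `sensor_msgs/LaserScan` message. It is not the ROS class itself, and the range limits used in the check below are illustrative values, not the LDS spec.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Scan:
    """Hypothetical stand-in for one full LDS rotation.

    Field names loosely follow ROS's sensor_msgs/LaserScan; this is not the ROS class.
    """
    angle_min: float        # angle of the first reading, in radians
    angle_increment: float  # angular step between consecutive readings, in radians
    range_min: float        # shortest distance treated as valid, in meters
    range_max: float        # longest distance treated as valid, in meters
    ranges: List[float]     # one distance per angle step, in meters


def scan_to_points(scan: Scan) -> List[Tuple[float, float]]:
    """Convert (angle, distance) readings into (x, y) points in the sensor frame.

    x points forward and y points left. Readings that are not finite or fall
    outside [range_min, range_max] are skipped.
    """
    points = []
    for i, r in enumerate(scan.ranges):
        if not math.isfinite(r) or r < scan.range_min or r > scan.range_max:
            continue  # drop invalid readings (e.g. no return, too close, too far)
        theta = scan.angle_min + i * scan.angle_increment
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points
```

With four readings spaced 90° apart, where the last two are invalid (a zero and an infinity), only the first two survive: one point straight ahead and one to the left.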

And here’s what the actual TurtleBot3 LDS looks like:

HLS-LFCD2

And just to show you how cool it looks, here’s a short video:

Teleoperation

One of the first things one can do with a TurtleBot3 is teleoperate it. It works fine with a keyboard (a bit like old-school gaming), but I went ahead and got a PS3 controller (ROBOTIS RC100, Xbox 360, and many other controllers are also supported). It makes controlling linear and angular velocity a bit easier.

I love having a PS3 controller at home, but no game system.
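Conceptually, teleoperation boils down to turning controller input into a linear and an angular velocity. Here is a minimal sketch of that mapping, assuming stick axes normalized to [-1, 1]. The axis assignment and dead zone are hypothetical, and the limits of 0.22 m/s and 2.84 rad/s are the Burger maximums I believe the TurtleBot3 teleop script uses, so treat them as assumptions to check against your own setup.

```python
from typing import Tuple

# Assumed Burger limits; double-check against your own TurtleBot3 setup.
MAX_LINEAR = 0.22   # m/s
MAX_ANGULAR = 2.84  # rad/s
DEADZONE = 0.05     # hypothetical: ignore tiny stick deflections around center


def stick_to_velocity(forward_axis: float, turn_axis: float) -> Tuple[float, float]:
    """Map two joystick axes in [-1, 1] to (linear m/s, angular rad/s).

    Which physical sticks feed forward_axis and turn_axis is a hypothetical
    choice; the actual axis numbering depends on the joystick driver.
    """
    def shape(value: float) -> float:
        value = max(-1.0, min(1.0, value))            # clamp out-of-range readings
        return 0.0 if abs(value) < DEADZONE else value  # suppress stick drift

    return shape(forward_axis) * MAX_LINEAR, shape(turn_axis) * MAX_ANGULAR
```

Scaling a normalized stick value by a fixed maximum is what makes a controller feel smoother than a keyboard: half a push gives half the speed, instead of stepping the velocity up one key press at a time.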

Visualizing location with rviz

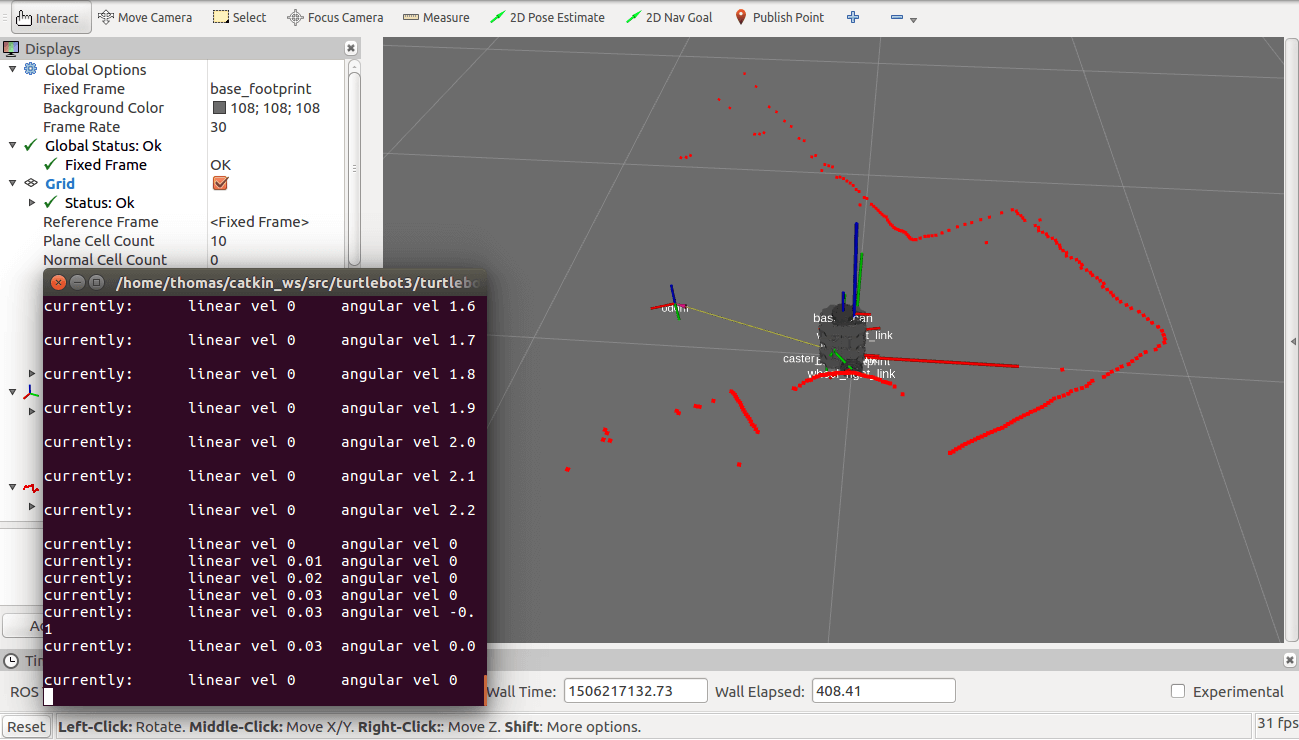

It’s fun to drive the robot around, but it’s so much cooler to watch it discover its surroundings (through the LDS) as it goes. To do this, we use rviz, a 3D visualizer for the Robot Operating System (ROS) framework.

The 3D model of the Burger has already been built, and with a couple of commands in the terminal, the robot appears in 3D space:

TurtleBot3 3D model in rviz “seeing” my living room.
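For the scan to line up with the room as the robot drives, every point measured in the sensor’s own frame has to be moved into a fixed frame (rviz lets you pick one, such as `odom`, and gets the transforms from ROS’s tf system). The math underneath is a 2D rotation by the robot’s heading followed by a translation by its position. This sketch only illustrates that math under a simplifying assumption that the LDS sits exactly at the robot’s origin; it is not how tf or rviz are implemented.

```python
import math
from typing import List, Tuple


def sensor_to_world(points: List[Tuple[float, float]],
                    robot_x: float, robot_y: float, robot_yaw: float
                    ) -> List[Tuple[float, float]]:
    """Place sensor-frame (x, y) points into a fixed frame given the robot's pose.

    Applies a 2D rigid transform: rotate by robot_yaw (radians), then translate
    by (robot_x, robot_y). Assumes the LDS sits at the robot's origin; a real
    robot also has a fixed sensor offset, which would be one more transform.
    """
    c, s = math.cos(robot_yaw), math.sin(robot_yaw)
    return [(robot_x + c * x - s * y, robot_y + s * x + c * y) for x, y in points]
```

For example, a wall detected 1 m straight ahead while the robot stands at (2, 3) facing +y (yaw of 90°) lands at roughly (2, 4) in the fixed frame. Repeating this for every scan as the robot moves is what makes the room appear to “build up” on screen.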

Next: SLAM

The next step for me is to actually do some SLAM using the gmapping ROS package, and then save the generated map so it can be used for navigation.
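gmapping itself is well beyond a short snippet, but the saved map is simple. As I understand it, a map saved with map_server’s `map_saver` is an 8-bit grayscale image plus a small YAML file with fields such as `resolution`, `origin`, `negate`, `occupied_thresh` and `free_thresh`. The sketch below shows how those fields are interpreted, assuming the default trinary mode. The threshold values are what I’d expect in a saved map, not guaranteed defaults, so check them against your own YAML, and the helper names are hypothetical.

```python
from typing import Tuple

# Threshold values assumed to match a map_saver YAML; check your own file.
OCCUPIED_THRESH = 0.65
FREE_THRESH = 0.196


def pixel_to_occupancy(pixel: int, negate: bool = False) -> int:
    """Classify one 8-bit map pixel as 100 (occupied), 0 (free) or -1 (unknown).

    Without negate, darker pixels mean a higher occupancy probability.
    """
    p = pixel / 255.0 if negate else (255 - pixel) / 255.0
    if p > OCCUPIED_THRESH:
        return 100
    if p < FREE_THRESH:
        return 0
    return -1


def cell_center_to_world(col: int, image_row: int, height: int,
                         resolution: float, origin: Tuple[float, float]
                         ) -> Tuple[float, float]:
    """World (x, y) of a cell's center, in meters.

    Image row 0 is the top of the picture, while the YAML origin refers to the
    lower-left cell, so rows are flipped. The origin's yaw is assumed to be zero.
    """
    grid_row = height - 1 - image_row
    return (origin[0] + (col + 0.5) * resolution,
            origin[1] + (grid_row + 0.5) * resolution)
```

In a saved map, black pixels (0) come out as occupied, near-white pixels (254) as free, and the gray used for unexplored areas (205) as unknown.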

I leave you with a recording of my robot’s recent exploration of my dining room. Note how the plane sensed by the LDS is obviously not at floor level but at “eye” level, so anything shorter than the sensor’s height simply goes unseen. Small details…